In the dynamic landscape of cloud computing, Amazon Web Services (AWS) shines as a prominent player, offering a plethora of services that empower developers to build robust and scalable applications. Among these services, Amazon S3 (Simple Storage Service) stands out as a versatile solution for storing and managing data objects.

This guide walks through using the AWS SDK client for S3 within the Serverless Framework, written in TypeScript. We’ll build three Lambda functions that showcase different aspects of S3 interaction: generating signed URLs for reading private objects, generating pre-signed URLs for direct uploads, and removing files from an S3 bucket.

By the end of this step-by-step article, you’ll be well-equipped to use the AWS SDK client for S3 in your own projects.

Table of contents

- Prerequisites

- Setting Up the Serverless Framework Project

- Navigating the project structure

- Implementing the AWS SDK Client for S3

- Testing the Lambda functions locally

- Deployment on AWS

- Conclusion

Prerequisites

Before we dive into building the AWS S3 SDK client, ensure you have the following in place:

- An AWS account with appropriate permissions to create and manage Lambda functions and S3 buckets.

- Node.js (v18) and npm installed on your development machine.

- Serverless Framework CLI installed (npm install -g serverless).

- Basic familiarity with TypeScript.

In case you need a refresher or are new to Serverless Framework, you might find it helpful to refer to our previous blog post on A Comprehensive Guide to Building a Serverless REST API with Typescript where we walk you through the steps of initializing a Serverless Framework project.

Setting Up the Serverless Framework Project

Now that you have all the essential tools installed, let’s set up our project. For the purpose of this tutorial, we will refer to the s3uploaderApp.

To set up the project locally, follow these steps:

- Clone the project: git clone https://github.com/zulbil/s3uploaderApp.git

- Change into the cloned directory: cd s3uploaderApp and run npm install

- Open the project in your preferred code editor.

Navigating the project structure

At this point, you have the project installed in your environment. Let’s look at the overall architecture by opening the serverless.ts file in the root directory. It declares three Lambda functions:

- getSignedUrl

- getPresignedUrl

- removeFile

Each of these Lambda functions interacts with an S3 bucket called UploaderS3Bucket through the S3BucketAccessRole IAM role, which grants only the permissions needed for object operations and logging. Logs are sent to Amazon CloudWatch for monitoring.

Next, let’s open the package.json file at the root of the project.

// package.json

{

...

"dependencies": {

"@aws-sdk/client-s3": "^3.345.0",

"@aws-sdk/s3-request-presigner": "^3.345.0",

...

},

...

}

Let’s focus on the dependencies block: we mainly use @aws-sdk/client-s3 and @aws-sdk/s3-request-presigner to interact with our S3 bucket.

Implementing the AWS SDK Client for S3

In this section, we will look at how the three Lambda functions that interact with Amazon S3 are implemented. The main logic is located in the src folder, which contains three subfolders: functions, libs and services.

- functions: This subfolder contains the source code for the Lambda functions used in the project. Each function has its own subfolder containing a handler.ts file, an index.ts file that exports the function, and other files that support the function’s implementation, such as mock.json for testing purposes and schema.ts for validating parameters sent to specific API routes.

- libs: This subfolder contains shared library code that is used across the project. It contains various utility files such as apiGateway.ts, handlerResolver.ts, and lambda.ts.

- services: This subfolder contains the s3Helper.ts file, which holds all the utility functions for interacting with the S3 bucket.

Generate Signed Url: Using aws sdk client s3 to generate secure urls for S3 Objects

The purpose of this lambda function is to generate a signed URL that allows temporary access to a private S3 object via the aws sdk client s3. Let’s take a look at the index.ts file located inside of src/functions/files.

// index.ts

import { handlerPath } from '@libs/handler-resolver';

import schema from './schema';

...

export const getSignedUrl = {

handler: `${handlerPath(__dirname)}/handler.getSignedUrl`,

events: [

{

http: {

method: 'post',

path: 'files/signed-url',

request: {

schemas: {

'application/json': schema

}

},

cors: true

}

}

]

};

Overall, this code defines an AWS Lambda function that is triggered by HTTP POST requests to the path files/signed-url. The request body is validated against the imported schema, and the function’s logic lives in a separate handler file. Its purpose is to generate a signed URL for accessing files that have been uploaded to the S3 bucket.
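The schema imported above is not shown in this article. As a minimal sketch, assuming it only requires the name property that the handler reads later, a schema.ts could look like the following. The exact contents of the repository’s file may differ.

```typescript
// schema.ts (hypothetical sketch; the repository's actual file may differ)
// JSON Schema that API Gateway uses to validate the POST body before the Lambda runs.
const schema = {
  type: 'object',
  properties: {
    // File name, later used to build the S3 key `media/<name>`
    name: { type: 'string' }
  },
  required: ['name']
} as const;

export default schema;
```

With a schema like this, a request without a name property would be rejected before the handler is invoked.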

Now let’s look at the function implementation by opening the handler.ts file located in src/functions/files.

// handler.ts

import { APIGatewayProxyEvent, APIGatewayProxyResult } from 'aws-lambda';

import { middyfy } from '@libs/lambda';

import {

generatePresignedUrl,

generateSignedUrl,

removeFileFromS3

} from 'src/services/s3Helper';

import { formatJSONResponse } from '@libs/api-gateway';

import { createLogger } from '@libs/logger';

const bucketName = process.env.UPLOADER_S3_BUCKET || 'uploader-s3-bucket';

const logger = createLogger('mediaProcessor');

interface FileRequest {

name : string;

}

export const getSignedUrl = middyfy(async (event: APIGatewayProxyEvent): Promise<APIGatewayProxyResult> => {

try {

logger.info('Generating signed URL', { body : event.body });

const request = event.body as unknown as FileRequest; // body is assumed to be already parsed into an object by the middyfy middleware

const { name } = request;

logger.info('Generating signed URL', { name });

const key = `media/${name}`;

const fileUrl = await generateSignedUrl(bucketName, key);

logger.info('Signed URL generated', { fileUrl });

return formatJSONResponse({ fileUrl });

} catch (error) {

return formatJSONResponse({

message : error.message

}, 500);

}

})

This code defines an AWS Lambda function named getSignedUrl that generates a signed URL for reading a file stored in an S3 bucket. The function is triggered by an API Gateway proxy event. It uses helper functions from the s3Helper module to interact with S3 and the formatJSONResponse function to create consistent JSON responses. Logging goes through a logger created with createLogger. The bucket name is read from the UPLOADER_S3_BUCKET environment variable and defaults to 'uploader-s3-bucket'. The request body is expected to be an object with a name property holding the file’s name, which is prefixed with media/ to form the object key. Any error is caught and returned as a JSON response with a 500 status code. Finally, middyfy wraps the handler with middleware, so cross-cutting concerns (typically parsing the JSON request body) stay out of the function itself.
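The handler builds the key by interpolating the client-supplied name directly. As a hedged sketch, a small helper could validate the name first so that every object stays directly under media/. The function buildMediaKey is hypothetical and not part of the project.

```typescript
// Hypothetical helper (not in the original project): builds the S3 key for a
// file and rejects names that would place the object outside a flat media/ prefix.
function buildMediaKey(name: string): string {
  const trimmed = name.trim();
  if (trimmed.length === 0) {
    throw new Error('File name must not be empty');
  }
  // Reject path separators and dot-only names
  if (trimmed.includes('/') || trimmed.includes('\\') || trimmed === '.' || trimmed === '..') {
    throw new Error(`Invalid file name: ${trimmed}`);
  }
  return `media/${trimmed}`;
}
```

In the handler, this would replace the template literal: const key = buildMediaKey(name). An invalid name then ends up in the existing catch block.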

Let’s take a look at the s3Helper.ts file located in the src/services folder.

// s3Helper.ts

import { createLogger } from '@libs/logger';

import {

S3Client,

PutObjectCommand,

GetObjectCommand,

DeleteObjectCommand,

HeadObjectCommand,

ListObjectsCommand

} from '@aws-sdk/client-s3';

import { getSignedUrl } from "@aws-sdk/s3-request-presigner";

const region = process.env.REGION || 'us-east-1';

const s3 = new S3Client({ region });

const logger = createLogger('s3-logger');

/**

* @param Bucket

* @param Key

* @returns

* @description Function to check if a file exists in S3

*/

async function checkFileExists(Bucket: string, Key: string): Promise<boolean> {

try {

const headParams = {

Bucket,

Key

};

await s3.send(new HeadObjectCommand(headParams));

return true;

} catch (error) {

console.log('Error:', error);

return false;

}

}

/**

* @param Bucket

* @param Key

* @param expiresIn

* @returns

*/

async function generateSignedUrl(Bucket: string, Key: string, expiresIn: number = 3600): Promise<string> {

try {

const exists = await checkFileExists(Bucket, Key)

if (!exists) {

throw new Error("Trying to generate a signed url for a file that does not exist");

}

const command = new GetObjectCommand({

Bucket,

Key

});

const signedUrl = await getSignedUrl(s3, command, {

expiresIn // Time in seconds until the URL expires

});

return signedUrl;

} catch (error) {

console.error('Error generating signed URL:', error);

throw error;

}

}

This code defines utility functions for working with Amazon S3. It uses the AWS SDK for JavaScript v3 (@aws-sdk/client-s3 and @aws-sdk/s3-request-presigner) to interact with S3 and generate signed URLs for accessing objects. The checkFileExists function checks whether a file exists in an S3 bucket by sending a HeadObjectCommand. The generateSignedUrl function signs a GetObjectCommand to produce a URL for reading a file, but only if the file exists; otherwise it throws an error.
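To make the expiresIn option more concrete: a Signature Version 4 signed URL carries its signing time and lifetime in the X-Amz-Date and X-Amz-Expires query parameters. The sketch below reads them back to compute when a URL stops working. The function name signedUrlExpiry is our own, and the URL in the usage note is a made-up example.

```typescript
// Reads the SigV4 query parameters of a signed URL and returns the moment
// the URL stops being valid. Illustrative helper, not part of the project.
function signedUrlExpiry(signedUrl: string): Date {
  const params = new URL(signedUrl).searchParams;
  const amzDate = params.get('X-Amz-Date');    // signing time, e.g. 20240101T120000Z
  const expires = params.get('X-Amz-Expires'); // lifetime in seconds
  if (!amzDate || !expires) {
    throw new Error('URL does not carry SigV4 query parameters');
  }
  const m = /^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/.exec(amzDate);
  if (!m) {
    throw new Error(`Unexpected X-Amz-Date format: ${amzDate}`);
  }
  const signedAt = Date.UTC(
    Number(m[1]), Number(m[2]) - 1, Number(m[3]),
    Number(m[4]), Number(m[5]), Number(m[6])
  );
  return new Date(signedAt + Number(expires) * 1000);
}
```

For a URL signed at 12:00 UTC with the default expiresIn of 3600, the function returns 13:00 UTC on the same day.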

Both functions are designed to handle potential errors and provide informative logging.
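One caveat: checkFileExists returns false for any error, so a permissions problem or a network failure looks the same as a missing file. AWS SDK v3 service errors expose a name and a $metadata.httpStatusCode, and a HeadObject call on a missing key normally reports NotFound with status 404. Under that assumption, a hypothetical helper such as isNotFoundError could tell the cases apart.

```typescript
// Hypothetical helper: returns true only when the error indicates that the
// object is missing, based on the error name or the HTTP status code.
function isNotFoundError(error: unknown): boolean {
  if (typeof error !== 'object' || error === null) {
    return false;
  }
  const e = error as { name?: string; $metadata?: { httpStatusCode?: number } };
  return e.name === 'NotFound' || e.$metadata?.httpStatusCode === 404;
}
```

Inside the catch block of checkFileExists, one option would be to return false only when isNotFoundError(error) is true and rethrow everything else, so that unexpected failures are not silently reported as a missing file.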

Generate Pre-Signed Url: Using aws sdk client S3 to craft pre-signed urls for direct uploads

The purpose of this Lambda function is to generate a pre-signed URL that facilitates direct uploads to an S3 bucket via the AWS SDK client for S3. Let’s take a look at the index.ts file located inside of src/functions/files.

// index.ts

...

export const getPresignedUrl = {

handler: `${handlerPath(__dirname)}/handler.getPresignedUrl`,

events: [

{

http: {

method: 'post',

path: 'files/presigned-url',

request: {

schemas: {

'application/json': schema

}

},

cors: true

}

}

]

};

...

This code defines an AWS Lambda function that is triggered by HTTP POST requests to the path files/presigned-url. The request body must be JSON that conforms to the specified schema.

Let’s take a look at the actual implementation of the getPresignedUrl Lambda function in the handler.ts file.

// handler.ts

...

interface FileRequest {

name : string;

}

// Define the Lambda function that generates a signed URL for file upload

export const getPresignedUrl = middyfy(async (event: APIGatewayProxyEvent): Promise<APIGatewayProxyResult> => {

try {

// Log the start of generating a signed URL

logger.info('Generating presigned URL', { body : event.body });

// Read the request body (assumed to be already parsed into an object by the middyfy middleware)

const request = event.body as unknown as FileRequest;

// Extract the file name

const { name } = request;

// Log the file name being processed

logger.info('Generating presigned URL', { name });

// Create a key for the S3 object using the file name

const key = `media/${name}`;

// Generate a signed URL for uploading the file to S3

const uploadURL = await generatePresignedUrl(bucketName, key);

// Log the generated signed URL

logger.info('Presigned URL generated', { uploadURL });

// Return a JSON response with the generated signed URL

return formatJSONResponse({

uploadURL

});

} catch (error) {

// If an error occurs, return an error JSON response

return formatJSONResponse({

message : error.message

}, 500);

}

})

This Lambda function generates and returns a pre-signed URL for uploading a file to an S3 bucket, and logs relevant information for troubleshooting. Errors thrown inside the handler are returned as a 500 response. The URL itself is produced by generatePresignedUrl.

Let’s see how this function is implemented inside of our s3Helper.ts file.

// s3Helper.ts

import { PutObjectCommand, ... } from '@aws-sdk/client-s3';

...

/**

* Generates a pre-signed URL for an S3 object

*

* @param Bucket - The name of the S3 bucket

* @param Key - The object key (path) within the bucket

* @param expiresIn - Optional parameter specifying the URL's expiration time in seconds (default is 3600 seconds)

* @returns A promise that resolves to the generated pre-signed URL or null if an error occurs

*/

async function generatePresignedUrl(Bucket: string, Key: string, expiresIn: number = 3600): Promise<string | null> {

try {

// Create a PutObjectCommand to represent uploading the object

const command = new PutObjectCommand({

Bucket,

Key

});

// Generate a pre-signed URL with a specific expiration time

const url = await getSignedUrl(s3, command, {

expiresIn

});

return url; // Return the generated pre-signed URL

} catch (error) {

console.log(error); // Log any errors that occur

return null; // Return null if an error occurs

}

}

This function generates a temporary URL that allows an object to be uploaded to an S3 bucket; it handles errors by logging them and returning null. Note the use of PutObjectCommand, imported from @aws-sdk/client-s3: signing this command is what allows the holder of the URL to upload an object to the specified key within the bucket. The returned URL expires after expiresIn seconds; the parameter is optional and defaults to 3600 (one hour). Because a failure yields null rather than an exception, the handler shown earlier would still return a success response with uploadURL set to null, so callers should check for that value.
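To show how a client might consume the uploadURL returned by the endpoint, here is a minimal sketch. The function name uploadWithPresignedUrl and the injectable fetchImpl parameter are our own illustration, not part of the project, and the sketch assumes a runtime with a global fetch (modern browsers or Node.js 18+).

```typescript
// Uploads a file body to S3 through a pre-signed URL with an HTTP PUT.
// fetchImpl is injectable so the function can be exercised without a network.
async function uploadWithPresignedUrl(
  uploadURL: string,
  body: Blob | string,
  contentType?: string,
  fetchImpl: typeof fetch = fetch
): Promise<void> {
  const response = await fetchImpl(uploadURL, {
    method: 'PUT', // must match the PutObjectCommand the URL was signed for
    headers: contentType ? { 'Content-Type': contentType } : {},
    body
  });
  if (!response.ok) {
    // S3 answers with a non-2xx status when the URL has expired or is invalid
    throw new Error(`Upload failed with status ${response.status}`);
  }
}
```

A browser client would first POST the file name to files/presigned-url, then pass the returned uploadURL and the selected File object to this function. No AWS credentials are needed on the client, since the URL already carries the signature.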

Remove file from S3: Using aws sdk client s3 to remove files from S3

The purpose of this lambda function is to remove a specified S3 object via the aws sdk client s3. Let’s take a look at the index.ts file located inside of src/functions/files.

// index.ts

...

export const removeFile = {

handler: `${handlerPath(__dirname)}/handler.removeFile`,

events: [

{

http: {

method: 'delete',

path: 'files/{name}',

cors: true

}

}

]

}

This API Gateway configuration sets up an AWS Lambda function that handles HTTP DELETE requests on files/{name}. The handler.removeFile function contains the logic for removing the file. Let’s take a look at the removeFile function in the handler.ts file.

// handler.ts

import { ..., removeFileFromS3 } from 'src/services/s3Helper';

...

export const removeFile = middyfy(async (event: APIGatewayProxyEvent): Promise<APIGatewayProxyResult> => {

try {

// Extract the file name from the URL path parameters

const name = event.pathParameters.name;

// Log the attempt to remove a file with the given name

logger.info('Remove file with name', { name });

// Create a key for the S3 object using the file name

const key = `media/${name}`;

// Remove the file from the specified S3 bucket

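const removed = await removeFileFromS3(bucketName, key);

// removeFileFromS3 returns false instead of throwing, so surface failures explicitly

if (!removed) {

throw new Error(`File ${name} could not be removed`);

}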

// Return a JSON response indicating the successful removal of the file

return formatJSONResponse({

message: `File ${name} removed`

});

} catch (error) {

// If an error occurs, return an error JSON response with a 500 status code

return formatJSONResponse({

message: error.message

}, 500);

}

})

This code defines an AWS Lambda function named removeFile that handles removing files from an S3 bucket. The function is triggered by an HTTP event from API Gateway. When triggered:

- The file name is extracted from the URL path parameters.

- The attempt to remove the file is logged.

- A key is created for the S3 object using the extracted file name.

- The specified file is removed from the S3 bucket using removeFileFromS3.

- If successful, a response is returned indicating the file has been removed.

- If an error occurs, an error response with a 500 status code is returned, including the error message.

Let’s take a look at the removeFileFromS3 function implementation in the s3Helper.ts file.

// s3Helper.ts

import { ..., DeleteObjectCommand, ... } from '@aws-sdk/client-s3';

...

/**

* Removes a file from an S3 bucket

*

* @param Bucket - The name of the S3 bucket

* @param Key - The object key (path) within the bucket

* @returns A promise that resolves to a boolean indicating whether the file was successfully removed

*/

async function removeFileFromS3(Bucket: string, Key: string): Promise<boolean> {

try {

// Check if the file exists in the bucket

const exists = await checkFileExists(Bucket, Key);

if (!exists) {

throw new Error("Trying to remove a file that does not exist");

}

// Prepare parameters for the DeleteObject command

const params = {

Bucket,

Key

};

// Send the DeleteObject command to remove the file

await s3.send(new DeleteObjectCommand(params));

return true; // File removed successfully

} catch (error) {

console.log(error); // Log any errors that occur

return false; // Return false if an error occurs

}

}

This code defines a function called removeFileFromS3 that removes a file from an Amazon S3 bucket. The function checks whether the file exists and, if it does, sends a DeleteObjectCommand to delete it. It returns true if the file is successfully removed. Any error, including the one thrown for a missing file, is logged and turned into a return value of false.
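A boolean result cannot distinguish a file that was never there from a deletion that failed. As a sketch of one possible alternative, the hypothetical RemoveOutcome type and responseForRemoval function below (neither exists in the project) map each case to its own HTTP status.

```typescript
// Hypothetical result type: separates "nothing to delete" from a real failure.
type RemoveOutcome = 'removed' | 'not_found' | 'failed';

// Maps an outcome to the status code and message an API client would receive.
function responseForRemoval(
  outcome: RemoveOutcome,
  name: string
): { statusCode: number; message: string } {
  switch (outcome) {
    case 'removed':
      return { statusCode: 200, message: `File ${name} removed` };
    case 'not_found':
      return { statusCode: 404, message: `File ${name} does not exist` };
    case 'failed':
      return { statusCode: 500, message: `Could not remove file ${name}` };
  }
}
```

With this shape, the helper would return 'not_found' when the existence check fails and 'failed' from its catch block, and the handler could pass the message and status code to formatJSONResponse.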

Testing the Lambda functions locally

To test AWS Lambda functions that interact with Amazon S3 locally, we use the serverless-offline and serverless-offline-s3 plugins, which let you emulate API Gateway and Amazon S3 on your machine. Both are listed in the devDependencies block of package.json.

// package.json

...

"devDependencies": {

...,

"serverless-offline": "^12.0.4",

"serverless-offline-s3": "^6.2.3",

...

},

Let’s open our serverless.ts file and see how the configuration is set up.

// serverless.ts

const serverlessConfiguration: AWS = {

...,

plugins: ['serverless-esbuild', 'serverless-offline', 'serverless-offline-s3'],

custom: {

'serverless-offline': {

httpPort: 3000 // Set the port for the local API Gateway simulation

},

},

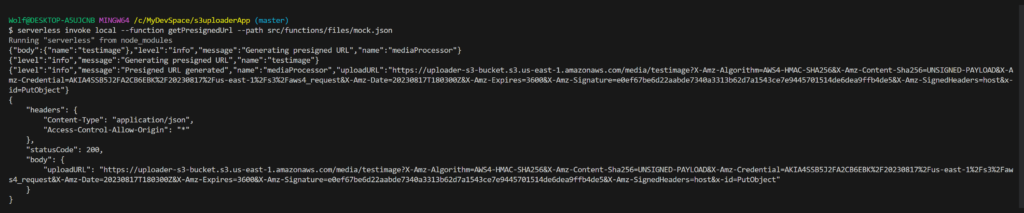

...To test your lambda function locally , you’ll use the serverless invoke local command:

serverless invoke local --function getPresignedUrl --path src/function/files/mock.json

You can see above a screenshot of the test execution including the output and logs that show you how your lambda function behaves locally.
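The contents of mock.json are not shown in this article. As a hedged sketch, the event passed with --path could be built as follows. It assumes the middyfy wrapper parses a JSON string body when the Content-Type header is application/json, and the file name photo.png is just an example.

```typescript
// Hypothetical contents for mock.json, built in TypeScript so the shape is explicit.
const mockEvent = {
  headers: { 'Content-Type': 'application/json' },
  // API Gateway delivers the request body as a string
  body: JSON.stringify({ name: 'photo.png' })
};

// Serialized form that could be saved as src/functions/files/mock.json
const mockJson = JSON.stringify(mockEvent, null, 2);
console.log(mockJson);
```

Saving the printed JSON as mock.json gives serverless invoke local an event that carries the name property the handlers expect.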

Conclusion

In summary, integrating the AWS SDK Client S3 with the Serverless Framework and TypeScript offers a robust solution for managing cloud storage. This combination enhances code quality, scalability, and efficiency in serverless applications. By following the outlined steps, developers can create agile applications that effectively utilize Amazon S3’s capabilities. Embracing this integration empowers developers to optimize cloud-based storage management while benefiting from the strengths of TypeScript and the Serverless Framework.